Deep Learning

Deep Learning note

sigmoid and tanh

If I have to predict among 2 categories, it’s better to use a sigmoid function. However, A softmax function is mostly used with more than 3 categories.

Sigmoid and Tanh essentially produce non-sparse models because their neurons pretty much always produce an output value.

It can be occured a vanishing gradients problem.

Relu

Relu function is more faster and has less problem with vanishing gradients problem.

Cross Entropy

Cross Entropy is usually used with one-hot encoding.

Gradient Desent

Batch Gradient Descent is too slow to calculate loss value.

Stochastic Gradient Descent is using a part of whole batches.

Local minima problem can be fixed by using SGD. However, It cannot be 100% accurated.

specify the bounding boxes

y = [1 bx by bh bw 0 0 0 ~]^T

bx and by have to be between 0 and 1. (x,y) cordinate needs to be in grid cell.

bh and bw could be more than 1. (height, width) can be greater than gird cell.

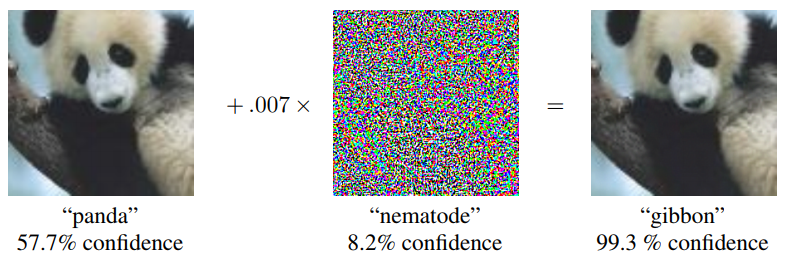

Adversarial Attack

An adversarial attack consists of subtly modifying an original image in such a way that the changes are almost undetectable to the human eye. The modified image is called an adversarial image, and when submitted to a classifier is misclassified, while the original one is correctly classified.

(https://medium.com/onfido-tech/adversarial-attacks-and-defences-for-convolutional-neural-networks-66915ece52e7)

https://arxiv.org/abs/1412.6572