[raspi] Self-Driving

Self-Driving (code)

First Try

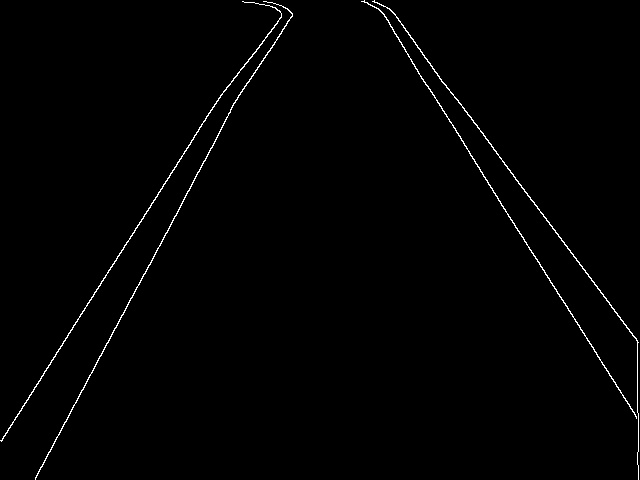

I tried using a camera to detect the tape on the floor so that the car could decide which direction to go.

First, I applied a Canny edge detector to the image and then used a Hough line transform to extract the line segments from it.

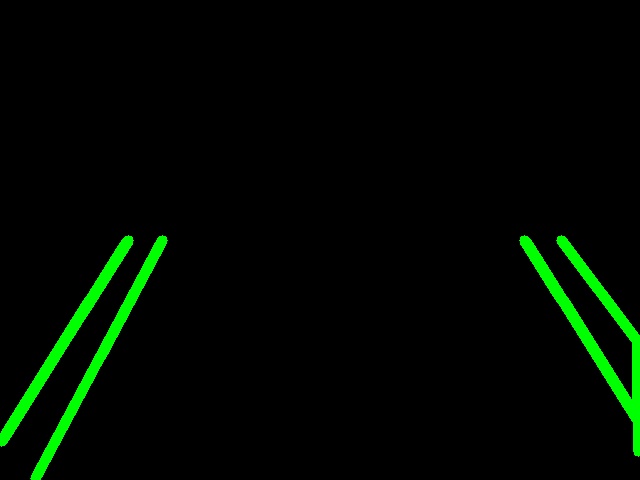

Next, I only wanted to detect lines within half of the image's height, so I limited detection to that region. Then I combined the original image with an image of the detected lines.

Finally, the Raspberry Pi counts the number of left lines and right lines to decide how to move.

If there are more left lines than right lines, the car moves to the right. (The accuracy was not bad, but there was not enough power to drive all four motors, so the car moved a little erratically.)
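The counting rule can be sketched in plain Python. How a segment is labeled "left" or "right" is not stated, so this sketch assumes it is decided by which half of the image the segment's midpoint falls in; `steer_from_segments` is a hypothetical name.

```python
def steer_from_segments(segments, image_width):
    """Return 'left', 'right' or 'forward' from detected line segments."""
    center = image_width / 2
    left = right = 0
    for x1, y1, x2, y2 in segments:
        # Assumption: classify each segment by the x position of its midpoint.
        if (x1 + x2) / 2 < center:
            left += 1
        else:
            right += 1
    # Rule from the text: more left lines than right lines -> move right.
    if left > right:
        return "right"
    if right > left:
        return "left"
    return "forward"
```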

However, I wanted to use deep learning with the Raspberry Pi, so I changed my approach.

Second Try

I took plenty of pictures of the car course (approximately 2000) to train the model with deep learning.

First, I used a PS4 controller instead of a keyboard because it gives a wider, continuous range of steering angles. The steering angle values ranged from -0.5 to 0.5.
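A sketch of turning a controller reading into that steering range. It assumes the stick axis is read as a float in [-1.0, 1.0] (pygame's `Joystick.get_axis()`, for example, reports values in that range) and simply scales it by 0.5; the small dead zone is an added assumption to keep stick drift from being recorded as steering.

```python
def axis_to_steering(axis_value, max_angle=0.5, dead_zone=0.05):
    """Map a joystick axis reading in [-1.0, 1.0] to a steering angle."""
    # Clamp in case the reading falls slightly outside [-1, 1].
    axis_value = max(-1.0, min(1.0, axis_value))
    # Treat tiny readings as "straight" so stick drift does not become noise.
    if abs(axis_value) < dead_zone:
        return 0.0
    return axis_value * max_angle
```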

Then I wrote code with OpenCV to save the pictures and steering angles into X and y lists while the car was moving, and trained the model on these datasets (X: images, y: steering angles).
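A sketch of the recording loop. `read_frame` and `read_angle` are hypothetical stand-ins for the camera capture and the controller reading; reading the angle immediately after each frame keeps the two lists the same length and index-aligned.

```python
import numpy as np


def collect_samples(read_frame, read_angle, num_samples):
    """Record (image, steering angle) pairs while driving.

    read_frame / read_angle: hypothetical callables for the camera and the
    controller; each call returns the current image or angle.
    """
    X, y = [], []
    for _ in range(num_samples):
        frame = read_frame()
        angle = read_angle()  # read right after the frame it belongs to
        X.append(frame)
        y.append(angle)
    # Convert to arrays so they can be fed to a training loop.
    return np.array(X), np.array(y, dtype=np.float32)
```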

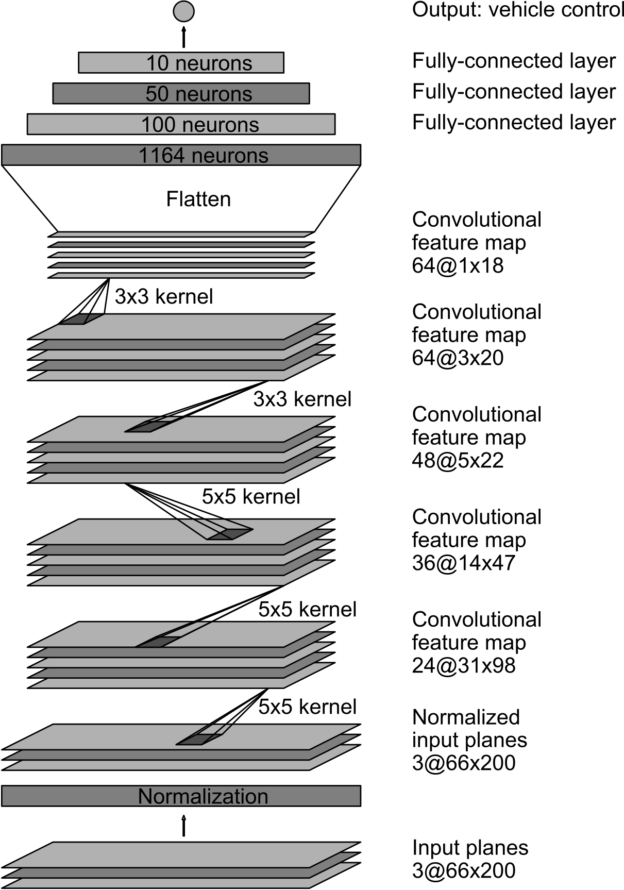

I trained my model using the NVIDIA network architecture.
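For reference, a Keras sketch of the NVIDIA layer layout (five convolutional layers followed by dense layers of 100, 50 and 10 units) with a single steering-angle output. The 66×200×3 input size follows NVIDIA's paper, which also feeds YUV images; this sketch does no color conversion, and the ELU activations, the rescaling layer and the Adam/MSE settings are assumptions, not necessarily what was used here.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_nvidia_model(input_shape=(66, 200, 3)):
    """NVIDIA-style end-to-end steering network (regression output)."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        # Scale pixel values from [0, 255] to [-1, 1].
        layers.Rescaling(1.0 / 127.5, offset=-1.0),
        # Three 5x5 convolutions with stride 2, then two 3x3 convolutions.
        layers.Conv2D(24, 5, strides=2, activation="elu"),
        layers.Conv2D(36, 5, strides=2, activation="elu"),
        layers.Conv2D(48, 5, strides=2, activation="elu"),
        layers.Conv2D(64, 3, activation="elu"),
        layers.Conv2D(64, 3, activation="elu"),
        layers.Flatten(),
        layers.Dense(100, activation="elu"),
        layers.Dense(50, activation="elu"),
        layers.Dense(10, activation="elu"),
        layers.Dense(1),  # predicted steering angle
    ])
    model.compile(optimizer=tf.keras.optimizers.Adam(1e-3), loss="mse")
    return model
```

With the default valid padding, the feature maps shrink from 66×200 to 1×18×64 before flattening (1152 values).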

However, my model's accuracy was only around 50%. I found that the images and steering angles were not being recorded at the same moment.

The problem was a delay between saving the pictures and appending the steering angles to the X and y lists.
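The log does not fix this delay; the next attempt changes approach instead. One common way to avoid such a mismatch, shown here as an assumption rather than the author's method, is to timestamp every frame and every steering reading (for example with `time.monotonic()`) and pair each frame with the reading closest in time. `pair_frames_with_angles` is a hypothetical helper.

```python
import bisect


def pair_frames_with_angles(frames, angle_log):
    """Pair each frame with the steering angle logged closest in time.

    frames:    list of (timestamp, image), sorted by timestamp
    angle_log: list of (timestamp, angle), sorted by timestamp
    """
    if not angle_log:
        raise ValueError("angle_log is empty")
    angle_times = [t for t, _ in angle_log]
    X, y = [], []
    for t, image in frames:
        # Index of the first angle reading at or after time t.
        i = bisect.bisect_left(angle_times, t)
        # Compare the reading just before t with the one at/after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(angle_log)]
        best = min(candidates, key=lambda j: abs(angle_times[j] - t))
        X.append(image)
        y.append(angle_log[best][1])
    return X, y
```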

So I changed the plan.

Third Try

My Raspberry Pi's SD card became corrupted, so I had to format it and reinstall the OS. Reinstalling OpenCV and the other libraries took a long time.

This time, to get better accuracy, I trained the model to classify each image as left, right, or forward.

I used the same NVIDIA architecture as before.
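Turning the recorded angles into the three classes could look like the sketch below. The ±0.1 threshold and the sign convention (negative = left) are assumptions. With three classes, the network's single regression output would typically be replaced by a three-unit softmax layer trained with categorical cross-entropy.

```python
CLASSES = ("left", "forward", "right")


def angle_to_class(angle, threshold=0.1):
    """Bucket a steering angle in [-0.5, 0.5] into one of three classes."""
    # Assumption: negative angles steer left, positive angles steer right.
    if angle < -threshold:
        return 0  # left
    if angle > threshold:
        return 2  # right
    return 1  # forward


def one_hot(index, num_classes=len(CLASSES)):
    """One-hot target vector for a class index."""
    vec = [0.0] * num_classes
    vec[index] = 1.0
    return vec
```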

Last three results:

| loss | acc | val_loss | val_acc |
|---|---|---|---|
| 0.3880 | 0.8750 | 0.3680 | 0.8875 |
| 0.3339 | 0.8969 | 0.3208 | 0.9250 |
| 0.3318 | 0.9062 | 0.5883 | 0.7250 |

Overall, I got around 85% accuracy, 80% validation accuracy, and a validation loss of about 0.35.

Next, I tried running TensorFlow on my Raspberry Pi to predict the car's direction.

Loading the model and making predictions were too slow on the Raspberry Pi, so I decided to run the deep learning part on my computer instead.

Fourth Try

I studied sockets so that the computer and the Raspberry Pi could send and receive data in both directions.

Each frame from the Raspberry Pi is sent to the computer over a socket, and the computer saves the images and steering angles in the X and y lists.
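A minimal sketch of two-way messaging with Python's standard `socket` and `struct` modules. TCP is a byte stream, so each frame is prefixed with its 4-byte length, and the computer can reply with a steering angle packed as a 4-byte float. The message layout and function names are assumptions for illustration.

```python
import socket
import struct

HEADER = struct.Struct("!I")  # 4-byte big-endian length prefix


def recv_exact(sock, n):
    """Read exactly n bytes, since recv() may return fewer."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError("socket closed before message was complete")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def send_message(sock, payload: bytes):
    """Send one length-prefixed message (e.g. an encoded camera frame)."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_message(sock) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length)


def send_angle(sock, angle: float):
    """Send a steering angle back as a 4-byte float."""
    sock.sendall(struct.pack("!f", angle))


def recv_angle(sock) -> float:
    (angle,) = struct.unpack("!f", recv_exact(sock, 4))
    return angle
```

On the Pi, a frame could be encoded with `cv2.imencode(".jpg", frame)` and the resulting buffer's `tobytes()` passed to `send_message`; the computer would decode it with `cv2.imdecode(np.frombuffer(data, np.uint8), cv2.IMREAD_COLOR)`.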

I tried this with around 1000 pictures. However, something went wrong with the Pi camera, so I had to try another way.

Fifth Try

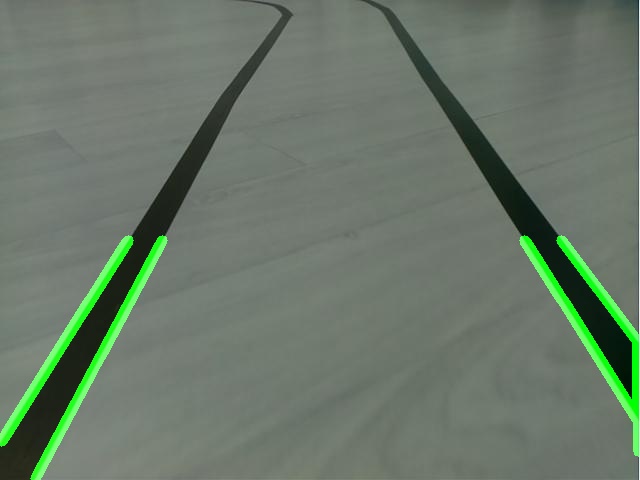

I watched a video about self-driving cars in which a YouTuber (Murtaza's Workshop) put white paper on the tape so that the difference between the floor and the tape was easier to see.

I got approximately 70% accuracy, but the accuracy still needs to be higher for the car to stay within the lines.

I need to adjust the data myself to get higher accuracy.

It worked pretty well.
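The log does not say how the data would be adjusted by hand. One common adjustment for this kind of driving data, given here only as an assumed example, is balancing the classes so that one direction (often "forward") does not dominate the training set. This sketch downsamples every class to the size of the smallest one; `balance_classes` is a hypothetical helper.

```python
import random
from collections import defaultdict


def balance_classes(samples, seed=0):
    """Downsample so every class has as many samples as the rarest one.

    samples: list of (image, label) pairs.
    """
    if not samples:
        return []
    by_label = defaultdict(list)
    for image, label in samples:
        by_label[label].append((image, label))
    smallest = min(len(group) for group in by_label.values())
    rng = random.Random(seed)  # fixed seed for a reproducible subset
    balanced = []
    for group in by_label.values():
        balanced.extend(rng.sample(group, smallest))
    rng.shuffle(balanced)
    return balanced
```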